Mastering Golang Performance: How Heap and Stack Impact App Efficiency

Unlock the full potential of your Go applications by mastering heap and stack memory usage. Learn practical tips for optimizing performance, reducing allocations, and scaling efficiently. Discover when to use stack vs heap, and implement advanced techniques for lightning-fast Go programs.

Go, or Golang, is known for its speed and efficiency. But to truly master Go performance, you need to understand how memory works. This guide will help you optimize your Go programs by exploring the heap and stack.

Understanding Go's Memory Model

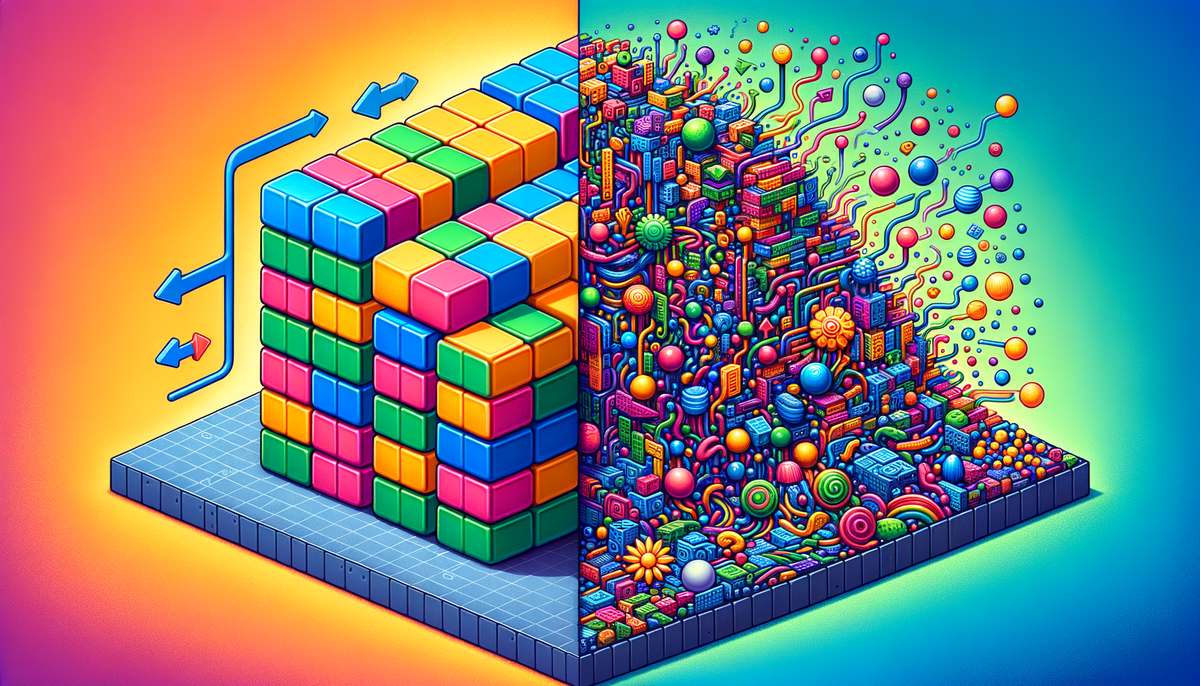

Go uses two main areas of memory: the stack and the heap. Let's break them down:

The Stack

- Fast and automatically managed

- Stores local variables

- Starts small (a few KB per goroutine) and grows on demand, up to a runtime-enforced maximum (1 GB by default on 64-bit systems)

- Each goroutine has its own stack

- LIFO (Last In, First Out) structure
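To see per-goroutine stacks in action, here is a minimal sketch that recurses far deeper than a few kilobytes of stack would allow, in several goroutines at once. The function names and the depth of 200000 are made up for illustration; the point is only that the runtime manages each stack for you.

```go
package main

import (
	"fmt"
	"sync"
)

// depth recurses n times. Go does not eliminate tail calls, so every level
// needs its own stack frame.
func depth(n int) int {
	if n == 0 {
		return 0
	}
	return 1 + depth(n-1)
}

// recurseInGoroutines runs depth(n) in several goroutines at once and
// returns each result. Every goroutine works on its own stack.
func recurseInGoroutines(workers, n int) []int {
	results := make([]int, workers)
	var wg sync.WaitGroup
	for i := range results {
		wg.Add(1)
		go func(i int) {
			defer wg.Done()
			results[i] = depth(n)
		}(i)
	}
	wg.Wait()
	return results
}

func main() {
	fmt.Println(recurseInGoroutines(4, 200000))
}
```

No stack size is configured anywhere in this program, and none of the goroutines interferes with another's local variables.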

The Heap

- Larger but slower than the stack

- Stores variables that outlive function calls

- Managed by the garbage collector

- Shared across goroutines

- More flexible, but comes with overhead

Why Memory Matters in Go

Efficient memory use can make your Go programs:

- Run faster

- Use fewer resources

- Scale better

- Spend less time in garbage collection

- Respond more quickly

Let's dive into some practical tips to boost your Go app's performance!

Stack vs Heap: Making the Right Choice

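Here's a simple rule: use the stack when you can, and the heap when you must. Go has no keyword for either one. The compiler decides where each variable lives, so in practice the rule means writing code that lets values stay on the stack.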

When to Use the Stack

- For small, fixed-size variables

- For short-lived data

- When you need quick access

- For value types (int, float64, bool, small structs) that are not referenced after the function returns

Example:

    func addNumbers(a, b int) int {
        result := a + b // 'result' lives on the stack
        return result
    }

When to Use the Heap

- For large data structures

- For data that needs to be shared between goroutines

- For data with unknown size at compile time

- For maps, channels, and slices whose backing storage is large or outlives the function (values stored in interfaces often end up here too)

Example:

    func createLargeSlice() []int {
        return make([]int, 1000000) // Large slice allocated on the heap
    }

Tips for Optimizing Go Memory Performance


Use Value Types for Small Structs

Instead of:

    type Point struct{ X, Y int }

    p := &Point{1, 2} // heap-allocated if the pointer escapes the function

Do this:

    type Point struct{ X, Y int }

    p := Point{1, 2} // a plain value that can stay on the stack
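The difference adds up in collections. As a rough sketch (the helper names and the sizes are chosen for illustration), testing.AllocsPerRun can compare a slice of values with a slice of pointers:

```go
package main

import (
	"fmt"
	"testing"
)

type Point struct{ X, Y int }

// Package-level sinks keep the results reachable, so the compiler cannot
// optimize the allocations away.
var (
	valueSink   []Point
	pointerSink []*Point
)

// makeValues stores n points inline in a single backing array.
func makeValues(n int) []Point {
	pts := make([]Point, n)
	for i := range pts {
		pts[i] = Point{i, i}
	}
	return pts
}

// makePointers stores n pointers, each to a separately allocated Point.
func makePointers(n int) []*Point {
	pts := make([]*Point, n)
	for i := range pts {
		pts[i] = &Point{i, i}
	}
	return pts
}

// countAllocs reports the average number of heap allocations per call.
func countAllocs() (values, pointers float64) {
	values = testing.AllocsPerRun(100, func() { valueSink = makeValues(1000) })
	pointers = testing.AllocsPerRun(100, func() { pointerSink = makePointers(1000) })
	return values, pointers
}

func main() {
	v, p := countAllocs()
	fmt.Printf("[]Point: %.0f allocs, []*Point: %.0f allocs\n", v, p)
}
```

The slice of values needs one backing array, while the slice of pointers also needs a separate object per element, each of which the garbage collector has to track.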

Preallocate Slices

Instead of:

    data := []int{}
    for i := 0; i < 10000; i++ {
        data = append(data, i)
    }

Do this:

    data := make([]int, 0, 10000)
    for i := 0; i < 10000; i++ {
        data = append(data, i)
    }
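To see what preallocation saves, this small sketch counts how often append has to move the data to a larger backing array. The countGrowths helper is hypothetical and exists only for this demonstration.

```go
package main

import "fmt"

// countGrowths appends n ints to s and reports how many times append moved
// the data to a larger backing array, observed as a change in capacity.
func countGrowths(s []int, n int) int {
	growths := 0
	for i := 0; i < n; i++ {
		before := cap(s)
		s = append(s, i)
		if cap(s) != before {
			growths++
		}
	}
	return growths
}

func main() {
	fmt.Println("no preallocation:", countGrowths([]int{}, 10000))
	fmt.Println("preallocated:    ", countGrowths(make([]int, 0, 10000), 10000))
}
```

Each growth is a new allocation plus a copy of everything appended so far. The preallocated slice never grows.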

Use sync.Pool for Frequently Allocated Objects

    var bufferPool = sync.Pool{
        New: func() interface{} { return new(bytes.Buffer) },
    }

    func processData(data []byte) {
        buf := bufferPool.Get().(*bytes.Buffer)
        buf.Reset() // a pooled buffer may still hold data from its last use
        defer bufferPool.Put(buf)
        // Use buf...
    }
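Here is a complete, runnable version of that pattern. The greet function and its message format are invented for the example; the parts that matter are the Reset after Get and the copy made before the buffer goes back to the pool.

```go
package main

import (
	"bytes"
	"fmt"
	"sync"
)

var bufferPool = sync.Pool{
	New: func() interface{} { return new(bytes.Buffer) },
}

// greet builds a string in a pooled buffer.
func greet(name string) string {
	buf := bufferPool.Get().(*bytes.Buffer)
	buf.Reset() // discard whatever the previous user left behind
	defer bufferPool.Put(buf)

	buf.WriteString("hello, ")
	buf.WriteString(name)
	return buf.String() // String copies, so the result stays valid after Put
}

func main() {
	fmt.Println(greet("gopher"))
	fmt.Println(greet("world"))
}
```

Without the Reset call, the second greeting could start with leftovers from the first.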

Avoid Unnecessary Memory Allocations

Instead of:

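    for _, v := range data {
        str := fmt.Sprintf("Value: %d", v) // allocates a new string every iteration
        // Use str...
    }

Do this:

    buf := make([]byte, 0, 32) // one buffer, reused on every iteration
    for _, v := range data {
        buf = append(buf[:0], "Value: "...)
        buf = strconv.AppendInt(buf, int64(v), 10)
        // Use buf...
    }

A strings.Builder would not help here, because its Reset method releases the underlying buffer rather than keeping it for reuse.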

Use Pointers Wisely

Use pointers for large structs or when you need to modify the original value:

    type BigStruct struct {
        // Many fields...
    }

    func modifyBigStruct(s *BigStruct) {
        // Modify s...
    }
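A quick sketch of the difference, using a hypothetical Settings struct in place of BigStruct:

```go
package main

import "fmt"

// Settings stands in for a larger struct; the fields are illustrative.
type Settings struct {
	Name    string
	Retries int
	Buffer  [1024]byte
}

// bumpCopy receives a copy, so the caller's value is unchanged.
func bumpCopy(s Settings) { s.Retries++ }

// bumpInPlace receives a pointer, so it updates the caller's value and
// avoids copying the 1 KB array.
func bumpInPlace(s *Settings) { s.Retries++ }

func main() {
	s := Settings{Name: "demo"}
	bumpCopy(s)
	fmt.Println(s.Retries) // 0
	bumpInPlace(&s)
	fmt.Println(s.Retries) // 1
}
```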

Profile Your Code

Use Go's built-in profiling tools to identify memory bottlenecks:

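    import "runtime/pprof" // plus "log", "os", and "runtime"

    // In your main function
    f, err := os.Create("mem.prof")
    if err != nil {
        log.Fatal(err)
    }
    defer f.Close()
    runtime.GC() // collect first so the profile reflects live objects
    pprof.WriteHeapProfile(f)

Then analyze with:

    go tool pprof mem.prof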
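If you'd like something you can run as-is, here is one possible self-contained version. The heapProfile helper and the throwaway allocations in main are assumptions made for the example; pprof.WriteHeapProfile accepts any io.Writer, so the profile is collected in memory first and then saved.

```go
package main

import (
	"bytes"
	"fmt"
	"os"
	"runtime"
	"runtime/pprof"
)

// heapProfile returns a heap profile of the running program.
func heapProfile() ([]byte, error) {
	var buf bytes.Buffer
	runtime.GC() // collect first so the profile reflects live objects
	if err := pprof.WriteHeapProfile(&buf); err != nil {
		return nil, err
	}
	return buf.Bytes(), nil
}

func main() {
	// Some work that allocates, so the profile has something to show.
	data := make([][]byte, 0, 1000)
	for i := 0; i < 1000; i++ {
		data = append(data, make([]byte, 1024))
	}

	profile, err := heapProfile()
	if err != nil {
		fmt.Fprintln(os.Stderr, "heap profile:", err)
		os.Exit(1)
	}
	if err := os.WriteFile("mem.prof", profile, 0o644); err != nil {
		fmt.Fprintln(os.Stderr, "write:", err)
		os.Exit(1)
	}
	fmt.Printf("wrote %d bytes to mem.prof (%d buffers kept alive)\n", len(profile), len(data))
}
```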

Minimize Allocations in Hot Paths

Identify frequently executed code paths and minimize allocations there:

    // Before
    func hotFunction(data []int) int {
        sum := 0
        for _, v := range data {
            sum += process(v) // Allocates memory on each call
        }
        return sum
    }

    // After
    func hotFunction(data []int) int {
        sum := 0
        p := newProcessor() // Allocate once
        for _, v := range data {
            sum += p.process(v) // No allocation
        }
        return sum
    }
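The process and newProcessor names above are placeholders. As a concrete sketch of the same idea, with a made-up workload (the median of each row, which needs a sorted copy), a scratch slice can be reused across calls. Rows are assumed to be non-empty.

```go
package main

import (
	"fmt"
	"sort"
)

// medianCopy sorts a fresh copy of row, so every call allocates.
func medianCopy(row []int) int {
	tmp := make([]int, len(row))
	copy(tmp, row)
	sort.Ints(tmp)
	return tmp[len(tmp)/2]
}

// medianer owns a scratch slice that is reused across calls.
type medianer struct{ scratch []int }

// median sorts a copy of row held in the scratch slice. The scratch slice
// only grows when a row is longer than any row seen before.
func (m *medianer) median(row []int) int {
	m.scratch = append(m.scratch[:0], row...)
	sort.Ints(m.scratch)
	return m.scratch[len(m.scratch)/2]
}

// sumMedians is the hot path: one medianer serves every row.
func sumMedians(rows [][]int) int {
	var m medianer
	sum := 0
	for _, row := range rows {
		sum += m.median(row)
	}
	return sum
}

func main() {
	rows := [][]int{{3, 1, 2}, {9, 7, 8}, {5, 4, 6}}
	fmt.Println(sumMedians(rows)) // 2 + 8 + 5 = 15
}
```

Both versions leave the input rows untouched; only the second avoids a new temporary slice per row.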

Use Value Receivers for Small Methods

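For small structs, prefer value receivers. Copying a small struct is cheap, and a value that never has its address taken is less likely to escape to the heap:

    type SmallStruct struct{ X, Y int }

    // Value receiver: s is a copy, which is cheap for a small struct
    func (s SmallStruct) Sum() int { return s.X + s.Y }

    // Pointer receiver: no copy and no allocation by itself, but taking the
    // address can push the value onto the heap if the pointer escapes
    func (s *SmallStruct) SumPtr() int { return s.X + s.Y }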

Understand Escape Analysis

Go's compiler performs escape analysis to determine whether a variable can be allocated on the stack or must be on the heap. Understanding this can help you write more efficient code:

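    // 'x' never leaves the function, so it can stay on the stack
    func stackAlloc() int {
        x := 42
        return x + 1
    }

    // 'x' escapes to the heap because its address outlives the call
    func heapAlloc() *int {
        x := 42
        return &x
    }

Passing a value to a function that takes an interface, such as fmt.Println, is usually reported as an escape too.

You can use the -gcflags=-m flag to see the compiler's escape analysis decisions:

    go build -gcflags=-m main.go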
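If compiler output feels abstract, you can also measure the effect directly. This is a minimal sketch using testing.AllocsPerRun; the sinks, the measure helper, and the go:noinline directives are additions for the demonstration, there to stop the compiler from optimizing the calls away.

```go
package main

import (
	"fmt"
	"testing"
)

// Package-level sinks make sure the results are really used.
var (
	intSink int
	ptrSink *int
)

//go:noinline
func stackAlloc() int {
	x := 42
	return x + 1
}

//go:noinline
func heapAlloc() *int {
	x := 42
	return &x // the address outlives the call, so x must live on the heap
}

// measure reports the average heap allocations per call of each function.
func measure() (stack, heap float64) {
	stack = testing.AllocsPerRun(1000, func() { intSink = stackAlloc() })
	heap = testing.AllocsPerRun(1000, func() { ptrSink = heapAlloc() })
	return stack, heap
}

func main() {
	s, h := measure()
	fmt.Printf("stackAlloc: %.0f allocs/call, heapAlloc: %.0f allocs/call\n", s, h)
}
```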

Use Benchmarks to Measure Performance

Always benchmark your optimizations to ensure they're actually improving performance:

    func BenchmarkMyFunction(b *testing.B) {
        for i := 0; i < b.N; i++ {
            MyFunction()
        }
    }

Run benchmarks, including allocation counts, with:

    go test -bench=. -benchmem
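Benchmark functions normally live in a _test.go file. To keep this sketch runnable as a single program, it calls them through testing.Benchmark instead. The fillGrow and fillPrealloc functions are hypothetical stand-ins for whatever you want to compare.

```go
package main

import (
	"fmt"
	"testing"
)

var sink []int // keeps results alive so the work is not optimized away

// fillGrow lets append grow the slice as needed.
func fillGrow(n int) []int {
	var s []int
	for i := 0; i < n; i++ {
		s = append(s, i)
	}
	return s
}

// fillPrealloc reserves the full capacity up front.
func fillPrealloc(n int) []int {
	s := make([]int, 0, n)
	for i := 0; i < n; i++ {
		s = append(s, i)
	}
	return s
}

func BenchmarkFillGrow(b *testing.B) {
	b.ReportAllocs()
	for i := 0; i < b.N; i++ {
		sink = fillGrow(10000)
	}
}

func BenchmarkFillPrealloc(b *testing.B) {
	b.ReportAllocs()
	for i := 0; i < b.N; i++ {
		sink = fillPrealloc(10000)
	}
}

func main() {
	grow := testing.Benchmark(BenchmarkFillGrow)
	pre := testing.Benchmark(BenchmarkFillPrealloc)
	fmt.Println("grow:       ", grow, grow.MemString())
	fmt.Println("preallocate:", pre, pre.MemString())
}
```

The numbers vary from machine to machine, so compare the two lines against each other rather than against anyone else's results.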

Real-World Example: Optimizing a Memory-Intensive Program

Let's optimize a program that processes a large amount of data:

    // Before optimization
    func processLargeData(data []int) []int {
        result := make([]int, 0)
        for _, v := range data {
            if v%2 == 0 {
                result = append(result, v*2)
            }
        }
        return result
    }

    // After optimization
    func processLargeData(data []int) []int {
        // Preallocate with an estimated size
        result := make([]int, 0, len(data)/2)
        for _, v := range data {
            if v%2 == 0 {
                result = append(result, v*2)
            }
        }
        return result
    }

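This optimization replaces the repeated grow-and-copy cycles of append with a single up-front allocation, which matters most for large datasets. The len(data)/2 capacity is only an estimate: if more than half of the values are even, append still has to grow the slice, and if far fewer are, part of the capacity goes unused.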
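When the estimate is likely to be off, one alternative is to count the matches first and allocate exactly once. This is a sketch of that variation, not part of the example above, and the doubleEvens name is made up. It trades a second pass over the input for an exact capacity.

```go
package main

import "fmt"

// doubleEvens returns each even value in data multiplied by two.
// It counts the matches first, so the result is allocated once at exactly
// the right size.
func doubleEvens(data []int) []int {
	n := 0
	for _, v := range data {
		if v%2 == 0 {
			n++
		}
	}
	result := make([]int, 0, n)
	for _, v := range data {
		if v%2 == 0 {
			result = append(result, v*2)
		}
	}
	return result
}

func main() {
	out := doubleEvens([]int{1, 2, 3, 4, 6})
	fmt.Println(out, len(out), cap(out)) // [4 8 12] 3 3
}
```

Whether the extra pass pays off depends on the data, so benchmark both versions on a realistic input.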

Advanced Techniques for Memory Optimization


Custom Memory Allocators

For very specific use cases, you might consider implementing a custom memory allocator:

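    type Pool struct {
        buf  []byte
        next int
    }

    func (p *Pool) Alloc(size int) []byte {
        if p.next+size > len(p.buf) {
            return make([]byte, size) // pool exhausted: fall back to the heap
        }
        end := p.next + size
        b := p.buf[p.next:end:end] // cap the slice so append cannot overwrite later allocations
        p.next = end
        return b
    }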
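Here is how such a pool might be used. NewPool and Reset are hypothetical additions for this sketch, and the 16-byte size is arbitrary. Note that memory handed out after a Reset is not zeroed.

```go
package main

import "fmt"

type Pool struct {
	buf  []byte
	next int
}

// NewPool reserves size bytes up front.
func NewPool(size int) *Pool { return &Pool{buf: make([]byte, size)} }

// Alloc hands out the next size bytes of the buffer, or falls back to a
// regular allocation when the buffer is exhausted.
func (p *Pool) Alloc(size int) []byte {
	if p.next+size > len(p.buf) {
		return make([]byte, size)
	}
	end := p.next + size
	b := p.buf[p.next:end:end] // capacity is capped at the slice's own end
	p.next = end
	return b
}

// Reset makes the whole buffer available again. Slices handed out earlier
// must not be used after this call.
func (p *Pool) Reset() { p.next = 0 }

func main() {
	p := NewPool(16)
	a := p.Alloc(8)
	b := p.Alloc(8)
	c := p.Alloc(8) // does not fit, so it is served by make instead
	copy(a, "aaaaaaaa")
	copy(b, "bbbbbbbb")
	a = append(a, 'x') // cap(a) is 8, so this reallocates instead of clobbering b
	fmt.Println(string(b), len(c), p.next) // bbbbbbbb 8 16
}
```

This kind of allocator only helps when many allocations share one lifetime and can be released together, so measure before reaching for it.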

Memory Mapping for Large Files

When working with large files, use memory mapping to avoid loading the entire file into memory:

    import "golang.org/x/exp/mmap"

    func readLargeFile(filename string) error {
        f, err := mmap.Open(filename)
        if err != nil {
            return err
        }
        defer f.Close()

        // Read data as needed
        data := make([]byte, 1024)
        _, err = f.ReadAt(data, 0)
        return err
    }

Zero-Copy Techniques

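Implement zero-copy techniques to reduce memory usage and improve performance. In Go this usually means passing a slice of an existing buffer instead of copying the data into a new one:

    func processBuffer(buf []byte) {
        // Process the buffer directly without copying
    }

    func handleRequest(w http.ResponseWriter, r *http.Request) {
        buf := make([]byte, 1024)
        n, _ := r.Body.Read(buf) // reads at most 1024 bytes; error handling omitted
        processBuffer(buf[:n])   // buf[:n] is a view of buf, not a copy
    }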
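To make the "view, not a copy" point concrete, here is a small sketch that splits a buffer without copying it. The key=value frame format and the splitFrame name are invented for the example.

```go
package main

import (
	"bytes"
	"fmt"
)

// splitFrame splits "key=value" without copying: both results are views
// into frame's backing array.
func splitFrame(frame []byte) (key, value []byte, ok bool) {
	i := bytes.IndexByte(frame, '=')
	if i < 0 {
		return nil, nil, false
	}
	return frame[:i], frame[i+1:], true
}

func main() {
	frame := []byte("user=gopher")
	key, value, _ := splitFrame(frame)
	fmt.Printf("%s %s\n", key, value) // user gopher

	// The views share memory with frame, so a write through one of them is
	// visible in the original. That is the price of not copying.
	value[0] = 'G'
	fmt.Printf("%s\n", frame) // user=Gopher
}
```

Shared memory cuts both ways: if the buffer is reused for the next request, any views you kept will change underneath you, so copy the parts that need to outlive it.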

Conclusion: Balancing Stack and Heap for Peak Performance

Mastering Go performance means understanding when to use the stack and when to use the heap. By following these tips and always thinking about memory usage, you can create faster, more efficient Go applications.

Remember:

- Use the stack for small, short-lived data

- Use the heap for large or shared data

- Profile your code to find bottlenecks

- Reduce allocations and use sync.Pool for frequently allocated objects

- Benchmark your optimizations to ensure they're effective

- Understand escape analysis and how it affects your code

- Consider advanced techniques like custom allocators and memory mapping for specific use cases

With these strategies, you'll be well on your way to creating lightning-fast Go programs that make the most of both the stack and the heap!

By consistently applying these principles and techniques, you can significantly improve the performance and efficiency of your Go applications. Remember that optimization is an iterative process, and what works best can vary depending on your specific use case. Always measure and profile your code to ensure your optimizations are having the desired effect.